You can listen to a song a hundred times and never really hear it. Then a random fluke of fortune changes everything. On the 101st time it finds its way through and it resonates.

There is an inspired serendipity to my life. A theme that underlies all that I have done, every decision I have made and every path I have traveled. On Halloween a year ago, that instinct came alive and my wandering found a home.

Sunday, October 31, 2010

Simple Math and Simple Politics

If you spend any time at all perusing the blogosphere, you will find a common theme coming from self-described liberal or progressive bloggers, and that is that those on the political right are ignoramuses. The argument is that they are just too stupid to know what's what - they are even anti-science, rejecting knowledge itself - and consequently they support dumb candidates advocating ignorant policies. Such arguments are particularly evident in the corner of the blogosphere that discusses the climate change issue. This line of argument of course is a variant of the thinking that if only people shared a common understanding of scientific facts they would also share a common political orientation (typically the political orientation of whoever is expressing these views).

Today's New York Times explains that top Democrats, including Barack Obama and Bill Clinton, have bought into this view, leading to charges of elitism from their political opponents. Here is an excerpt:

In the Boston-area home of a wealthy hospital executive one Saturday evening this month, President Obama departed from his usual campaign stump speech and offered an explanation as to why Democrats were seemingly doing so poorly this election season. Voters, he said, just aren’t thinking straight.

“Part of the reason that our politics seems so tough right now, and facts and science and argument does not seem to be winning the day all the time, is because we’re hard-wired not to always think clearly when we’re scared,” he told a roomful of doctors who chipped in at least $15,200 each to Democratic coffers. “And the country is scared, and they have good reason to be.”

The notion that voters would reject Democrats only because they don’t understand the facts prompted a round of recriminations — “Obama the snob,” read the headline on a Washington Post column by Michael Gerson, the former speechwriter for President George W. Bush — and fueled the underlying argument of the campaign that ends Tuesday. For all the discussion of health care and spending and jobs, at the core of the nation’s debate this fall has been the battle of elitism.

And here is what the NYT reports about Bill Clinton expressing similar views:

Former President Bill Clinton has a riff in his standard speech as he campaigns for Democrats in which he mocks voters for knowing more about their local college football team statistics than they do about the issues that will determine the future of the country. “Don’t bother us with facts; we’ve got our minds made up,” he said in Michigan last week, mimicking such voters.

But if they understood the facts, he continued, they would naturally vote Democratic. “If it’s a choice and we’re thinking, he wins big and America wins big,” Mr. Clinton told a crowd in Battle Creek, pointing to Representative Mark Schauer, an endangered first-term Democrat.

The problem with such arguments is that they are simply wrong. Facts do not compel particular political views, much less policy outcomes.

But for the purposes of discussion, let's just assume that those on the political right are in fact ignoramuses. Even if that were the case, appeals to the wisdom of the educated (and the stupidness of others) would still be a losing electoral proposition as shown by the graph at the top of this post (data here in XLS): Americans older than 18 registered to vote with a college degree represent only 32% of the voting population. Those with an advanced degree represent only 11% of the population registered to vote. For those smart folks on the left, I shouldn't have to explain the corresponding electoral implications.
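For anyone who does want the implication spelled out, here is a rough back-of-the-envelope sketch. It uses only the 32% and 11% shares quoted above; the function name and the assumptions (every member of the educated group votes your way, and everyone turns out at the same rate) are mine and purely illustrative.

```python
# Illustrative arithmetic only. The 32% and 11% shares come from the post;
# unanimous support within the group and equal turnout are assumptions.
COLLEGE_SHARE = 0.32    # registered voters with a college degree
ADVANCED_SHARE = 0.11   # registered voters with an advanced degree


def needed_from_rest(base_share, base_support=1.0, majority=0.5):
    """Fraction of all remaining voters needed to reach `majority`,
    assuming `base_support` of the base group votes your way."""
    rest = 1.0 - base_share
    shortfall = majority - base_share * base_support
    return max(0.0, shortfall / rest)


for label, share in [("college degree", COLLEGE_SHARE),
                     ("advanced degree", ADVANCED_SHARE)]:
    print(f"{label}: need {needed_from_rest(share):.1%} of everyone else")
```

Even with every college graduate on board, roughly a quarter of everyone else is still needed; with only the advanced-degree holders, it is well over 40%.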

It should also be fairly obvious that when highly educated people tell those who are less educated that they are too stupid to know better, it probably does not lead to acceptance of claims to authority, much less reinforce trust in experts. In fact, it might even have the opposite effect.

For those on the left who spend a lot of time explaining how intelligent they are, their politics are not always so smart.

Graph Your Halloween Candy!

Jedi Master say...

"A good Jedi must always graph his Halloween candy before eating it. May the force be with you!!"

Fastest graph, EVER! ;)

Jedi Masters need chocolate, too. Think the Jedi would notice if a piece of his graph disappeared? ;)

Bill Maher disturbed by Islamization

Allah Save the Queen!

I think it is a pretty scary symbol of the direction that the demographics of many Western countries have taken. In his TV show, Bill Maher revealed that he is worried, too. His worries were confirmed and strengthened when he learned that the Sharia Law is already used as a parallel system of courts in the U.K.

Juan Williams was recently fired from NPR just for stating that he gets nervous if his fellow airline passengers are dressed in a Muslim way. So do I and I think that most non-Muslims do - but I also start to think about the best ways to do "let's roll" in the case that it's needed. :-)

Well, I actually have the same thought even if I see an Arab; the Muslim garb is not the real issue because e.g. all the 9/11 hijackers were dressed in Western clothes. But I suppose that if Williams said that it was the Arab looks that made him worry on the airplane, it would be even worse for the PC police. So the criticism that he doesn't appreciate that the hijackers try to be invisible is really hypocritical.

His is a completely rational reaction: if a sufficiently assertive Muslim is on board, the probability of critical problems with the aircraft significantly increases: just compute it. It doesn't matter whether you associate the elevated risk with the religion or the race because in most cases, the two criteria coincide: it's more important that the risk is elevated. It's bad if NPR wants to punish the people - and its own people - for reacting rationally.

In the past centuries, our ancestors would fight against the Muslims and the expansion of the Turks and other nations to Europe. I don't think that there was anything wrong about this defense. They may have lived in feudalism and their science was much more primitive but they were right about this point.

The political correctness transforms millions of people in the West to building blocks of the fifth column of jihad. And it's worrisome for those of us who think that the Islamic societies are not as human and desirable as the Western ones.

One must realize that different countries are influenced to a completely different extent. The map above shows that while Norway, Sweden, Baltic states, Poland, Czechia, Slovakia, Hungary, Slovenia, Romania, Italy, and a few others remain below 1% of the Muslim population, France is already near 10% and other large EU countries such as Britain, Spain, Germany, Benelux are approaching 5% or so.

A combination of immigration and the natality gap may make the Muslims a majority in 2 generations - in countries such as France. And maybe one generation. Immigration represents 85% of the growth of the European Muslims right now. The previous link also suggests that the Muslim population has the potential to double within a decade. With this rate, France needs just 20 years or so to become a dominantly Muslim country.

Newborns in Czechia

Just for fun, it's interesting to see what are the most frequent names in a country of "infidels" such as Czechia. I find it kind of shocking, in a very positive way. The data are from early 2010.

One can see that the popular names are changing with time. The most frequent names of fresh mothers - which reflects the popular names of newborn girls in the 1970s and 1980s, i.e. late stages of socialism - are

1. Jana [Jane], big gap,

2. Petra

3. Lenka

They're the most likely names to attract adult men these days. ;-)

None of them is too popular for the newborns today. In fact, only Kateřina [Catherine], Lucie [Lucy], and Veronika [Veronica] appear in the intersection of the frequent names of mothers and daughters.

The most frequent names of fresh fathers are

1. Petr [Peter], big gap,

2. Martin

3. Jan [John]

4. Jiří [George]

5. Pavel [Paul]

The intersection of fathers and sons is dominated by Jan [John], Tomáš [Thomas], and David. Note that these are pretty civilian names on average: the last three names are among the four members of the Beatles (we don't use Ringo here). But what is kind of fascinating are the current frequent names of newborns.

The girls are clearly led by Tereza [Theresa] which is a pretty Christian name if you remember Mother Theresa. This dominant name is followed by Anna [Anne] - which is very old-fashioned in the Czech context and people used to think it was going extinct two decades ago. The bronze medal goes to Eliška [Elise]. Karolína and Natálie [Caroline and Natalia] follow.

All these names are pretty old-fashioned. If you say "Karolína" or "Eliška" to people of my generation, we usually think of "Karolina Světlá" and "Eliška Krásnohorská", two (largely) 19th century Czech female writers who were kind of feminists. OK, sorry, I don't want to insult Světlá who wasn't really a feminist. ;-) Feminism in the Czech lands peaked in the 19th century; at that time, the culture was not yet sufficiently advanced for the women to realize that feminism was a shallow trap they had to overcome (which they eventually did).

But this return to the roots is nothing compared to the currently most frequent newborn boys' names.

The leadership was regained by Jan [John], followed by Jakub, Tomáš, Lukáš, Filip [Jacob, Thomas, Luke, Philip]. Vojtěch, Ondřej, David, Adam [Albert, Andrew, David, Adam] are in the top ten, too.

It's even more remarkable if you look at the top 3-4 lists in the regions. Take the Pilsner region, for example - the second one from the West. You see:

1. Matěj [Matthew]

2. Jakub [Jacob]

3. Jan [John]

4. Lukáš [Luke]

Now, recall that the four gospels were written by Matthew, Mark, John, and Luke. Three of the four names are among the top four most frequent newborn boy names in the atheist Pilsner region. Recall that only 1-2% of the Pilsner region attends the church regularly. Isn't it funny?

(OK, I am improving the story a bit by not mentioning that "Matěj" is a 2nd-layer Czech variation of Matthew. The author of the gospel is actually referred to as "Matouš" which is itself a newer version of an even older name, "Matyáš". All these variations are still being used.)

Mark is also relatively frequent but no longer in the top ten. Instead, among the authors of gospel, Mark was replaced by Jacob who didn't write his own gospel but he was at least the third patriarch of the Jewish people, a leader also known as Israel. ;-)

There are lots of names that people could have chosen if they wanted their baby to have non-religious names - like Luboš or Markéta, to mention two examples haha. About 200-300 names are being used each month: see a calendar with the name day we celebrate each day. (The out-of-calendar names recently given to babies include curiosities such as Chloe, Ban Mai, Megan, Uljana, Gaia, Graciela, Malvína, and Ribana for girls - as well as Abdev, Dean, Ronny, Timothy, Diviš, Kelvin, Lev, and Maxián for boys.)

But that's not what's happening. So I would say that despite the widespread atheism, the inclination of the people to preserve the Christian roots and traditions is significant. I guess that if the proportion of the Muslims grew above 2% and they caused 10% of the problems they cause in France, parties that wouldn't consider the Islamization to be a sensitive problem and a potential threat would quickly be moved to the political fringe of my homeland.

However, it's not 100% guaranteed that the current evolution of the European Union will actually allow us - and other European nations - to decide about these existential questions such as the regulation of immigration flows.

The main "active" promotion of the harmful developments probably comes from France. Germany has had a lot of workers from Turkey - for various reasons. The Germans used to think that the Turks stayed in Germany temporarily. Time was needed to show that this expectation was wrong. The Germans seem to realize that "multi kulti", as Angela Merkel and others call it, doesn't work.

This satirical map of Europe in 2015 shows the dominant Muslim nations that are gradually overtaking individual countries.

However, France seems to be actively supporting the rise of Islam in Europe already today.

Saturday, October 30, 2010

NASA offers you life in prison: on Mars

NASA's Ames Research Center is working on its "Hundred-Year Starship" project that will sell you a one-way ticket to Mars:

Pop Sci, Google News, Google

This job is clearly not appropriate for losers. It's kind of dramatic: NASA needs to save some money. The return trip is more expensive, roughly by a factor of five or so, and NASA wants to save the money by sending settlers who have the balls to do the unthinkable.

Would you have the courage and desire to spend the rest of your life in a space suit in between red stones, living at permanent risk that the vital technology may stop working at any moment? Would you be eager to work for some hypothetically brighter future of the people who continue to live on the blue planet rather than the red one?

If you wouldn't but if you accept that they will surely find someone who will be ready to participate, do you have any moral complaints against the plan?

I wonder how the life - and its end - would work in the absence of hospitals, hospices, good entertainment centers, classical restrooms, and millions of other things. Could the job be safely done by prisoners or terrorists? It's surely a topic for a very emotional movie.

NASA expects such an expedition to be there before 2030.

Math App Saturday (#14 Motion Math-Fractions)

Based on the recommendation at Mathwire, I BOUGHT a fraction game math app, Motion Math. First time I've EVER paid for an app. Shelling out 99 cents about killed me. ;)

Here's how it works:

To play, you tilt your device (iPad, iTouch, or iPhone) so that a fraction ball falls onto the correct place on a variety of number lines. It starts fairly slow. Three levels to choose from. If you need help, you get prompts.

What I like:

It's fun. I gave it to my 8yo son to try. He's had very minimal formal exposure to fractions. When he didn't know a fraction, the game slowly gave him hints, filling in parts of the number line, giving an arrow to indicate the direction he should try, etc. When I asked how much he enjoyed it, he replied, "Good." Granted, it's a new game, but he's been playing it now for more than 20 minutes without wanting to stop.

The game uses a variety of number lines (including negative numbers), mixed fractions, decimals, both number and pictorial versions of fractions. It provided good fraction practice.
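For the curious, here's a rough sketch of the math the game is drilling: where a value lands on a number line, and which slot (less than, equal to, greater than) a comparison belongs in. This is my own illustration, not how the app is actually coded; the function names are made up, and I've left out mixed fractions to keep it short.

```python
from fractions import Fraction


def position_on_line(value, lo, hi):
    """Return how far along the line from `lo` to `hi` the value sits,
    as an exact fraction (0 = left end, 1 = right end)."""
    value, lo, hi = Fraction(value), Fraction(lo), Fraction(hi)
    if hi <= lo:
        raise ValueError("the right end must be greater than the left end")
    return (value - lo) / (hi - lo)


def compare_slot(a, b):
    """Name the slot that describes how `a` compares to `b`."""
    a, b = Fraction(a), Fraction(b)
    if a < b:
        return "less than"
    if a == b:
        return "equal to"
    return "greater than"


print(position_on_line("3/4", 0, 1))    # 3/4 of the way along a 0-to-1 line
print(position_on_line("-1/2", -1, 1))  # 1/4 of the way along a -1-to-1 line
print(compare_slot("2/4", "0.5"))       # equal to
```

Fractions and decimals can be mixed freely here because `Fraction` accepts strings like "3/4" and "0.5" and keeps everything exact.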

Issues I experienced:

I didn't have any problems with the initial games. After you've mastered several levels, however, you're given an exercise where the ball must fall into a less than, equal to, or greater than, slot. My iTouch did not react in a timely manner. It was VERY difficult for me to get the ball into the correct category, even when I immediately knew the answer. As the levels got harder, this particular exercise produced falling numbers faster than I was able to see them...and since my machine was already reacting slowly at the easy levels, the harder levels were impossible.

I enjoyed the regular bouncing ball exercises and could mostly control where they fell. I was sometimes frustrated in my ability to get the machine to tilt so that the ball fell where I wanted it to; I thought that might be due to my own inexperience with app games. So I gave it to THE. APP. MASTER. My 14yo son. He agreed that it reacted slow...jerky. But the falling ball parts were pretty good...it was mostly the less than/equal to/greater than parts that my machine struggled to accommodate.

I don't think I'll be able to master the game due to the difficulty of getting the app to respond. But I made it to the second to the top level on my first try. Not bad. And I had fun doing it.

In summary:

The app was worth 99 cents. I've found free apps that I like almost as well; IF the app had responded better to my movement, I would have felt different.

Have you tried it? What do you think?

Update 1/2011: We've had this game now for several months and my 8yo son has been able to pass my level of play. In talking about fractions the other day, I was shocked at how much my son was able to articulate. He said it was because of this game and "because we talked about how Mary and Laura shared 2 cookies with their sister, Carrie." (Little House books) Go figure. ;)

Friday, October 29, 2010

Bacon & Cartoons: Friday Sharia & Fatwa Round-Up

According to a story by Brian Montopoli of CBS News, it would seem that America has little to fear from the brutality of Islamic Sharia law; no, the danger is posed by conservative constitutionalists who are concerned by the efforts of Muslims to impose their religion on the 99 percent of Americans who do not share their religion. Montopoli begins his screed with the declaration:

The rise of anti-Muslim sentiment in America has brought with it a wave of largely-unsubstantiated suggestions from conservative media commentators and politicians that America is at risk of falling under the sway of Sharia Law.

Sharron Angle on Sharia law in America: "That's what I had read"

Sharron Angle has now addressed the national flap over her suggestion that Sharia law might be a threat in certain American cities, and while she seemed to try to disavow the claim, I'm not sure her clarification will clarify all that much.

Angle was asked about her comments during an exchange in the safe confines of conservative radio host Lars Larson's show. She has been attacked by the Mayor of Dearborn, Michigan, one of the cities she discussed during her earlier discourse on Sharia law, and Larson served up Angle a great big meatball, giving her a chance to distance herself from the mess.

You tell me if she took the bait:

LARSON: Hey Sharron, let me ask you about something that it sounds like the City of Dearborn Michigan is going after you because of some things you've said about Sharia law. Why don't you tell people what you really said and tell me what your response is to the Mayor of Dearborn.

ANGLE: Well, what I had been hearing was that there would be quite a ruckus there because of what's going on in Michigan in response to Sharia law and people that think that Sharia law should be in place here in the United States. So of course I didn't intend to offend anyone with my remarks. It's just that people are quite nervous about the idea of having anything but a Constitutional republic here in the United States.

LARSON: Now did you say though that Sharia law was in place in Dearborn right now?

ANGLE: I had read that in one place, that they have started using some Sharia law there. That's what I had read.

Oklahoma's Preemptive Strike Against Sharia Law

Will anti-Sharia law initiatives be in future election cycles what anti-gay marriage initiatives were before? That is, a cultural wedge issue the GOP uses to ensure that hard-core conservatives enthusiastically flock to the polls?

Drive-through Fatwas

(Filed by: Storm Bear July 20, 2007)

I was driving a bunch this last week and was listening to world news podcasts on my iPod instead of hate radio. One story was about Dar Al-Iftaa and their fatwa issuing machine.

Fatwas are in reality just religious rulings. Questions like "how much inheritance should I leave my brother?" and so forth are answered, but they are not only given in person, they are given via satellite television just like the 700 Club, plus there is also phone, email and even fatwas delivered via text message.

The Muslim world is beginning to see fatwa issuance as getting out of control and they are moving to have an official governing body to oversee all of Islam's fatwa edicts. But not everyone is in love with Dar Al-Iftaa...

Sheikh Safwat Hegazi's recent fatwa that it is halal to kill Israeli civilians on Egyptian land is one such potentially disastrous ruling; and arguably neither Dar Al-Iftaa's ruling to the contrary nor Sheikh Safwat's own retraction will render it entirely harmless. While acknowledging Hegazi's line of thinking as a response to frustration with Israel's aggression and impunity, Nosseir argued that with the correct interpretation of Qur'an, the fatwa is invalid, citing Verse 6 from Surat Al-Momtahena, to make her point: "Allah does not forbid you to deal justly and kindly with those who fought not against you on account of religion and did not drive you out of your homes. Verily Allah loves those who deal with equity."

To avoid such fatwas, Nosseir suggests drawing up a Dar Al-Iftaa committee of religious scholars from the various modern sciences as well as Sharia: "Different scientific spheres are now needed to substantiate a religious ruling." To meet on a monthly basis, such a committee would manage to cover a given topic from every conceivable angle, coming up with a solid statement: "So eventually we would be able to consolidate the value of the fatwa and make people more eager to follow it," she opined. "With all due respect to the early scholars of Islam, we need to look into our daily issues with a different eye, ijtihad [independent thought] in Islam is allowed till doom's day." Significantly, she appears to agree with El-Magdoub: "I think the people who should participate in such a committee should be volunteers, not commissioned and not paid, to ensure they are doing so out of their own free will. We strongly need such committee, but we lack the determination."

Evidently, there exists in Islamic culture a need for fatwas that center around mindless killing. How they overcome this is anyone's guess.

My money is on the use of a lot of bombs, guns and grenades.

The Fatwas Department is open seven days a week, from 10:30 am to 4:30 pm. Request a fatwa

Fatwas and religious queries may be sent via the following methods:

| In Person | See the Imams face to face in the ICC. They are located in offices 2, 10, 11 and 12. |
| Email | Send your query to fatwas@iccuk.org |
| On-Line | Filling in an online form using your Web browser |
| Fax | Faxing your query on 020 7724 0493 |
| Phone | The imams may be reached directly on the phone. Their tel nos are listed on the contacts page |
| | Address questions to: The Fatwa Committee, The Islamic Cultural Centre & The London Central Mosque, 146 Park Road, London NW8 7RG |

Please continue to support Blazing Cat Fur

Please continue to support BCF in the fight for free speech, commentary and open debate in Canada. Elements of our Government and Bureaucracy are consistently working to limit the voice of ordinary citizens and set the agenda by which people can speak. Section 13(1) of the CHRA is the prime example of the Orwellian controls these people want to continue.

Realize this: They haven't come for you yet, but you are on the list.

Blazing Cat Fur: A Heartfelt Thanks To All For Your Support Of Blazingcatfur

Statins Use in Presence of Elevated Liver Enzymes: What to Do?

The beneficial role of statins in primary and secondary prevention of coronary heart disease has resulted in their frequent use in clinical practice.

However, safety concerns, especially regarding hepatotoxicity, have driven multiple trials, which have demonstrated the low incidence of statin-related hepatic adverse effects. The most commonly reported hepatic adverse effect is the phenomenon known as transaminitis, in which liver enzyme levels are elevated in the absence of proven hepatotoxicity.

"Transaminitis" is usually asymptomatic, reversible, and dose-related.

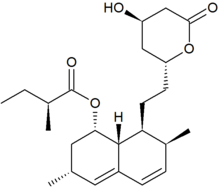

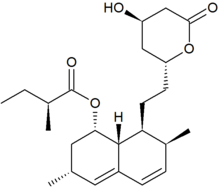

Lovastatin, a compound isolated from Aspergillus terreus, was the first statin to be marketed for lowering cholesterol. Image source: Wikipedia, public domain.

The increasing incidence of chronic liver diseases, including nonalcoholic fatty liver disease and hepatitis C, has created a new challenge when initiating statin treatment. These diseases result in abnormally high liver biochemistry values, discouraging statin use.

A PubMed/MEDLINE search of the literature (1994-2008) was performed for this Mayo Clinic Proceedings review. The review supports the use of statin treatment in patients with high cardiovascular risk whose elevated aminotransferase levels have no clinical relevance or are attributable to known stable chronic liver conditions.

References:

Statins in the Treatment of Dyslipidemia in the Presence of Elevated Liver Aminotransferase Levels: A Therapeutic Dilemma. Rossana M. Calderon, MD, Luigi X. Cubeddu, MD, Ronald B. Goldberg, MD and Eugene R. Schiff, MD. Mayo Clinic Proceedings April 2010 vol. 85 no. 4 349-356.

Nima Arkani-Hamed: The Messenger Lectures

In 1964, Richard Feynman returned to Cornell University for a while and gave his Messenger Lectures that are available to you thanks to Bill Gates.

Now it's 2010 and the five Messenger Lectures were delivered by the world's leading particle phenomenologist, Nima Arkani-Hamed (IAS Princeton).

Here is the first one, from October 4th, 2010.

All the lectures are available (5 times 90 minutes):

- Setting the stage: spacetime and quantum mechanics

- Our "Standard Models" of particle physics and cosmology

- Spacetime is doomed: what replaces it?

- Why is there a macroscopic universe?

- A new golden age of experiments: What might we know by 2020?

Hat tip: Nima ;-)

Al Gore's car idling during his talk

But you know, the folks in Gothenburg invited a speaker who doesn't give a damn about the environment or the laws, for that matter. That's why Al Gore left his car idling during his whole talk - for 3,600 seconds or so.

And he has the arrogance to talk about pollution. This hypocritical jerk should clearly be arrested at least for 60 years. But it seems that the laws no longer apply to him. He seems to be innocent because he also replaced the public transportation by a Swedish government jet he flew - which was actually illegal as well.

There is only one explanation why the laws don't apply to him: he is no longer a human being from the viewpoint of the law. Well, he is not human from my viewpoint, either. So let me ceremoniously strip him of the human rights now. Please feel free to deal with him accordingly.

Via Climate Depot, Sify, Prison Planet

LHC: ATLAS, CMS approach 50/pb of integrated luminosity

The major detectors CMS and ATLAS at the LHC are approaching 50 inverse picobarns of data each: the threshold could be surpassed today.

By now, each of them has recorded more than 3 trillion events. That's five times more than what we had at the beginning of this month! Indeed, the older version of the article, attached below, celebrated 10 inverse picobarns.

Fifty inverse picobarns is already a high enough integrated luminosity for many relatively sensible models of new physics (mostly SUSY) to show their fingerprint in the data. My estimated probability that 50/pb is enough to see "something new" is 30% but I haven't heard of any hints that this has indeed happened yet.

The collider will keep on colliding pairs of protons for a few more days - before it switches to heavy ions - so the year 2010 won't end too much above 50 inverse picobarns.

However, the current maximal initial luminosity (with 368 bunches) would be more than enough to reach the inverse femtobarn per detector well before the end of 2011 - which is one of the plans. (Most of the 40/pb were collected in the last week or two, not just in the last month.) And the luminosity may be increased more than that - perhaps by a factor of two or three. Clearly, it may be time for the LHC folks to make their 2011 plans slightly more ambitious when it comes to luminosity - or energy.

CMS has also started the "Fireworks Live" page (click!) where you can analyze the individual events. I (almost) randomly caught the event #116,633,520 from Run 149,294. (I skipped about 5 screens to get a slightly more exciting event.) If you think it is one of the most important events among the 3 trillion CMS collisions, and if you know what it means, let me know. ;-) However, don't try to look for the God particle on the picture. Here's the warning from the superiors:

Meanwhile, Tevatron's life was extended by 3 years; see HEPAP documents. A light Higgs could be seen and analyzed by the Tevatron before the LHC but in almost everything else, the Tevatron is vastly inferior already today.

Older version of this text:

Originally posted on October 5th, 2010

LHC: ATLAS, CMS surpass 10/pb of integrated luminosity

(Special comment for Tommaso Dorigo: if you don't like the term "recorded luminosity" for whatever rational or irrational reasons, please send your complaints to the ATLAS experiment that uses the term in the middle of their main web page. I am simply adopting the experts' terminology because it sounds sensible to me.)

Ten reciprocal picobarns could be enough to see some spectacular signatures of supersymmetry according to some papers such as

Konar et al.: How to look for supersymmetry under the lamppost at the LHC

In their particular paper, they assume light - but conceivably light (near the electroweak scale) - superpartner masses. For each ordering of the masses, they are able to find some characteristic processes - typically decay processes with up to 8 leptons in the final state. If Nature were generous enough, these spectacular events would have already been recorded.

To raise your mood, I have chosen the most optimistic paper. Its only citation so far, a paper by Altunkaynak et al., demands 100/pb instead of 10/pb for similar discoveries to be made.

The total number of inelastic collisions within the ATLAS detector has been 726 billion or so: we are approaching one trillion. Similarly for CMS (and LHCb). Also, the maximum instant luminosity has been raised to 69 inverse microbarns per second (69/μb/s). That translates to 2.18 inverse femtobarns per year (2.18/fb/yr).
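The unit conversion in the last two sentences is easy to check. The snippet below is only a sketch of that arithmetic; the function name and the choice of a 365.25-day year are my assumptions, not anything taken from the LHC pages.

```python
# Convert a peak luminosity in inverse microbarns per second
# into inverse femtobarns per year of uninterrupted running.
SECONDS_PER_YEAR = 365.25 * 24 * 3600      # assumed: a Julian year
INV_MICROBARN_TO_INV_FEMTOBARN = 1e-9      # 1 fb = 1e-9 ub, so 1/ub = 1e-9 /fb


def peak_rate_to_yearly(inv_microbarn_per_second):
    """Hypothetical helper: yearly integrated luminosity at a constant rate."""
    return (inv_microbarn_per_second
            * SECONDS_PER_YEAR
            * INV_MICROBARN_TO_INV_FEMTOBARN)


yearly = peak_rate_to_yearly(69.0)
print(f"{yearly:.2f} /fb per year")
```

This is the idealized figure for a machine that runs at its peak all year; the real collected luminosity is lower.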

Recall that this maximum initial luminosity has to reach around 5-6 inverse femtobarns per year to actually collect one inverse femtobarn of data (the goal) during the year 2011 (because of decreasing luminosity during runs, and because of interruptions). The LHC folks are only a factor of 2-3 away from the instant luminosity needed for the 2011 dreams. The numerous, possibly insurmountable orders of magnitude that were discouraging us a few months ago have already been conquered.

I will be recycling and updating this entry - and moving it forward in time - throughout the month of October.

Thursday, October 28, 2010

Malawi: "Howling" Muslim students attending a Catholic school tear up New Testaments and throw them in the street

Funny, there will be no Christian riots. No streets blocked and piles of tires burned in faraway places. No protests to the U.N. to impose itself on other nations to stop criticism of Christianity. That proves it can be done: insults protested, but allowed to pass non-violently. Imagine that.

Jihad Watch

What's new in obstetrics and gynecology from UpToDate

35% of UpToDate topics are updated every four months. The editors select a small number of the most important updates and share them via the "What's new" page. I selected the brief excerpts below from What's new in obstetrics and gynecology:

Influenza vaccination with inactivated vaccine is recommended for pregnant women, regardless of the stage of pregnancy. The 2010-2011 influenza vaccine is trivalent and includes antigens from both the 2009 pandemic H1N1 influenza virus and seasonal influenza viruses.

Use of acetaminophen during pregnancy was associated with a reduction in neural tube defects, as well as cleft lip/palate and gastroschisis. These data support the safety of acetaminophen for relief of fever and pain.

Gynecology

Like CA 125, human epididymal secretory protein E4 (HE4) is a promising biomarker for ovarian cancer. In contrast to CA 125, HE4 levels do not appear to be elevated in women with endometriosis, and thus can be useful to rule out ovarian cancer in patients with endometriosis and a pelvic mass suspected to be an endometrioma.

Sterilization does not impact sexual function. Sexual function appears to be unchanged or improved in women following tubal sterilization.

Botulinum toxin may be useful for overactive bladder syndrome (onabotulinumtoxinA, Botox®). Detrusor injection of botulinum toxin (BoNT) had a transient effect. The average time between injections was 8 to 12 months.

References:

What's new in obstetrics and gynecology. UpToDate.

Image source: Wikipedia, GNU Free Documentation License.

How can you not trade this?

VXX_60 Trend Model

VXX_240 Trend Model

VXX Daily Trend Model

Let me count the ways:

(1) Just trade VXX itself for Long & Short;

(2) Trade XXV (inverse VXX) for VXX-Shorts;

(3) Trade SPY or QQQQ inversely for VXX signals, i.e. SPY LONG for VXX SHORT signals.

Forget about "correlation with VIX," just look at the Trend Models.

XXV Daily Trend Model

A

The Economist on The Climate Fix: "Bright and Provocative"

The Economist has a very positive review of The Climate Fix. Here is how it starts:

THE title of this bright and provocative book is knowingly ambiguous; what sort of fix is it about? At least three distinct fixes, it turns out. There is fix-as-dilemma, fix-as-stitch-up and fix-as-solution. Roger Pielke, a professor of environmental studies at the University of Colorado, has useful things to say on all three fixes, and in so doing largely fulfils his aim of providing a guide to the perplexed.

Read the entire review here.

Particles and signals moving backward in time

Mephisto has raised the issue of the Wheeler-Feynman absorber theory in particular and the influences that travel backward in time in general.

I remember having been excited about many similar topics when I was a teenager. Feynman and Wheeler were surely excited, too. But as physics was making its historical progress towards quantum field theory and beyond, most of the original reasons for excitement turned out to be misguided.

In some sense, each (independently thinking) physicist is following the footsteps of the history. So let's return to the early 20th century, or to the moment when I was 15 years old or so. Remember: these two moments are not identical - they're just analogous. ;-)

Divergences in classical physics

Newton's first conceptual framework was based on classical mechanics: coordinates x(t) of point masses obeyed differential equations. That was his version of a theory of everything. He had to make so many steps and he has extended the reach of science so massively that it wouldn't be shocking if he had believed that he had found a theory of everything. However, Newton was modest and he kind of appreciated that his theory was just "effective", using a modern jargon.

People eventually applied these laws to the motion of many particles that constituted a continuum (e.g. a liquid). Effectively, they invented or derived the concept of a field. Throughout the 19th century, most of the physicists - including the top ones such as James Clerk Maxwell - would still believe that Newton's framework was primary: there always had to be some "corpuscles" or atoms behind any phenomenon. There had to be some x(t) underlying everything.

As you know, these misconceptions forced the people to believe in the luminiferous aether, a hypothetical substance whose mechanical properties looked like the electromagnetic phenomena. The electromagnetic waves (including light) were thought to be quasi-sound waves in a new environment, the aether. This environment had to be able to penetrate anything. It had many other bizarre properties, too.

Hendrik Lorentz figured out that the empirical evidence for the electromagnetic phenomena only supported one electric vector E and one magnetic vector B at each point. A very simple environment, indeed. Everything else was derived. Lorentz explained how the vectors H, D are related to B, E in an environment.

Of course, people already knew Maxwell's equations but largely because Newton's framework was so successful and so imprinted in their bones, people assumed it had to be fundamental for everything. Fields "couldn't be" fundamental until 1905 when Albert Einstein, elaborating upon some confused and incomplete findings by Lorentz, realized that there was no aether. The electromagnetic field itself was fundamental. The vacuum was completely empty. It had to be empty of particles, otherwise the principle of relativity would have been violated.

This was a qualitative change of the framework. Instead of a finite number of observables "x(t)" - the coordinates of elementary particles - people suddenly thought that the world was described by fields such as "phi(x,y,z,t)". Fields depended both on space and time. The number of degrees of freedom grew by an infinite factor. It was a pretty important technical change but physics was still awaiting much deeper, conceptual transformations. (I primarily mean the switch to the probabilistic quantum framework but its epistemological features won't be discussed in this article.)

The classical field theory eventually became compatible with special relativity - Maxwell's equations were always compatible with it (as shown by Lorentz) even when people didn't appreciate this symmetry - and with general relativity.

However, in the 19th century, it wasn't possible to realistically describe matter in terms of fields. So people believed in some kind of a dual description whose basic degrees of freedom included both coordinates of particles as well as fields. These two portions of reality had to interact in both ways.

(To reveal it in advance, quantum field theory finally unified particles and fields. All particles became just quanta of fields. Some fields such as the Dirac field used to be known just as particles - electrons - while others were originally known as fields and not particles - such as photons that were appreciated later.)

However, the electromagnetic field induced by a charged point-like particle is kind of divergent. In particular, the electrostatic potential goes like "phi=Q/r" in certain units. The total energy of the field may either be calculated as the volume integral of "E^2/2", or as a volume integral of "phi.rho". The equivalence may be easily shown by integration by parts.

Whatever formula you choose, it's clear that point-like sources will carry an infinite energy. For example, the electrostatic field around the point-like source goes like "E=Q/r^2", its square (over two) is "E^2/2 = Q^2 / 2r^4", and this goes so rapidly to infinity for small "r" that even if you integrate it over volume, with the "4.pi.r^2.dr" measure, you still get an integral of "1/r^2" from zero to infinity. And it diverges as "1/r_0" where "r_0" is the cutoff minimum distance.

If you assume that the source is really point-like, "r_0=0", its energy is divergent. That was a very ugly feature of the combined theory of fields and point-like sources.
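The "1/r_0" divergence can also be checked numerically. The following is a minimal sketch under my own assumptions: units where "Q=1" and the field is "E=Q/r^2", a large but finite outer radius "r_max" standing in for infinity, and a hypothetical helper name. It integrates "E^2/2" over the volume outside the cutoff and compares the result with the closed form "2.pi.Q^2/r_0".

```python
import math


def field_energy_outside(r0, Q=1.0, r_max=1e6, steps=100_000):
    """Midpoint-rule estimate of the integral of E^2/2 over r0 < r < r_max,
    with E = Q/r^2 and the volume element 4*pi*r^2 dr.

    The integration variable is s = ln(r), so the steep region near r0
    is sampled as densely as the flat region at large r.
    """
    s_lo, s_hi = math.log(r0), math.log(r_max)
    ds = (s_hi - s_lo) / steps
    total = 0.0
    for k in range(steps):
        r = math.exp(s_lo + (k + 0.5) * ds)
        energy_density = Q**2 / (2.0 * r**4)
        total += energy_density * 4.0 * math.pi * r**2 * r * ds  # dr = r ds
    return total


for r0 in (1.0, 0.1, 0.01):
    numeric = field_energy_outside(r0)
    closed_form = 2.0 * math.pi / r0  # 2*pi*Q^2/r0 for Q = 1, r_max -> infinity
    print(r0, numeric, closed_form)
```

Each time the cutoff shrinks by a factor of ten, the energy grows by the same factor, which is the linear divergence described above.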

Because the classical theory based on particles and fields was otherwise so meaningful and convincing - a clear candidate for a theory of everything, as Lord Kelvin and others appreciated much more than Newton and much more than they should have :-) - it was natural to try to solve the last tiny problems with that picture. The divergent electrostatic self-energy was one of them.

You can try to remove it by replacing the point-like electrons with finite lumps of matter (non-point-like elementary objects), by adding non-linearities (some of them may create electron-like "solitons", smooth localized solutions), by the Wheeler-Feynman absorber theory, and by other ideas. However, all of these ideas may be seen to be redundant or wrong if you get to quantum field theory which really explains what's going on with these particles and interactions.

On the other hand, each of these defunct ideas has left some "memories" in the modern physics. While the ideas were wrong solutions to the original problem, they haven't quite disappeared.

First, one of the assumptions that was often accepted even by the physicists who were well aware of the quantum phenomena was that one has to "fix" the classical theory's divergences before one quantizes it. You shouldn't quantize a theory with problems.

This was an arbitrary irrational assumption that couldn't have been proved. The easiest way to see that there was no proof is to notice that the assumption is wrong and there can't be any proofs of wrong statements. ;-) The actual justification of this wrong assumption was that the quantization procedure was so new and deserved such a special protection that you shouldn't try to feed garbage into it. However, this expectation was wrong.

More technically, we can see that the quantum phenomena "kick in" long before the problems with the divergent classical self-energy become important. Why?

It's because, as we have calculated, the self-energy forces us to impose a cutoff "r_0" at the distance such that the energy stored in the field is comparable to the electron's rest mass "m":

"Q^2 / r_0 ~ m"

The purely numerical factors such as "1/4.pi.epsilon_0" are omitted in the equation above. "Q" is the elementary electron charge.

At any rate, the distance "r_0" that satisfies the condition above is "Q^2/m", so it is approximately 137 times shorter than "1/m", than the Compton wavelength of the electron: recall the value of the fine-structure constant. This distance is known as the classical radius of the electron.

But because the fine-structure constant is (much) smaller than one, the classical radius of the electron is shorter than the Compton wavelength of the electron. So if you approach shorter distances, long before you get to the "structure of the electron" that is responsible for its finite self-energy, you hit the Compton wavelength (something in between the nuclear and atomic radius) where the quantum phenomena cannot be neglected.
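To attach numbers to the two scales, here is a small sketch. The constants are standard CODATA values that I am adding myself (they do not appear in the text above), and "1/m" is read as the reduced Compton wavelength "hbar/(m.c)".

```python
# Compare the classical electron radius with the reduced Compton wavelength.
# Constants are CODATA 2018 values, added here for illustration.
ALPHA = 7.2973525693e-3          # fine-structure constant
HBAR_C_MEV_FM = 197.3269804      # hbar*c in MeV*fm
ELECTRON_MASS_MEV = 0.51099895   # electron rest energy in MeV

reduced_compton_fm = HBAR_C_MEV_FM / ELECTRON_MASS_MEV   # "1/m" in natural units
classical_radius_fm = ALPHA * reduced_compton_fm         # "Q^2/m" in natural units
ratio = reduced_compton_fm / classical_radius_fm         # equals 1/alpha

print(f"reduced Compton wavelength: {reduced_compton_fm:.3f} fm")
print(f"classical electron radius:  {classical_radius_fm:.4f} fm")
print(f"ratio:                      {ratio:.3f}")
```

The ratio is just the inverse fine-structure constant, so the quantum scale is reached roughly two orders of magnitude before the classical self-energy scale.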

So the electron's self-energy is a "more distant" problem than quantum mechanics. That's why you should first quantize the electron's electric field and then solve the problems with the self-energy. That's of course what quantum field theory does for you. It was premature to solve the self-energy problem in classical physics because in the real world, all of its technical features get completely modified by quantum mechanics.

This simple scaling argument shows that all the attempts to regulate the electron's self-energy in the classical theory were irrelevant for the real world, to say the least, and misguided, to crisply summarize their value. But let's look at some of them.

Born-Infeld model

One of the attempts was to modify physics by adding nonlinearities in the Lagrangian. The Born-Infeld model is the most famous example. It involves a square root of a determinant that replaces the simple "F_{mn}F^{mn}" term in the electromagnetic Lagrangian. This "F^2" term still appears if you Taylor-expand the square root.

Using a modern language, this model adds some higher-derivative terms that modify the short-distance physics in such a way that there's a chance that the self-energy will become finite. Don't forget that this whole "project proposal" based on classical field theory is misguided.

While this solution wasn't helpful to solve the original problem, the particular action is "sexy" in a certain way and it has actually emerged from serious physics - as the effective action for D-branes in string theory. But I want to look at another school, the Wheeler-Feynman absorber theory.

The Wheeler-Feynman absorber theory

The Wheeler-Feynman absorber theory starts with a curious observation about the time-reversal symmetry.

Both authors of course realized that the macroscopic phenomena are evidently irreversible. But the microscopic laws describing very simple objects - such as one electron - should be and are T-invariant. They don't change if you exchange the past and the future. This symmetry was easily seen in the equations of mechanics or electromagnetism (and its violations by the weak force were unknown at the time).

However, there exists a valid yet seemingly curious way to calculate the field around moving charged objects. The result is known as the Liénard–Wiechert potentials.

A static charge has a "1/r" potential around it. But how do we calculate the field if the charge is moving? Well, the answer can actually be written down explicitly. The potential (including the vector potential "A") at a given moment "t" in time may be calculated as the superposition of the potentials induced by other charges in the Universe.

Each charge contributes something like "Q/r" to the potential at a given point - and it's multiplied by the velocity of the sources if they're nonzero and if you calculate the vector potential. But the funny feature is that you must look where the charges were in the past - exactly at the right moment such that the signal from those sources would arrive at the point "x, t" whose "phi, A" you're calculating, at the right moment, by the speed of light.

So the potential "phi(t)" (and "A(t)") depends on charge densities "rho(t-r/c)" (and the currents "j(t-r/c)"), where "c" is the speed of light and "r" is the distance between the source and the probe. One can actually write this formula exactly. It's simple and it solves Maxwell's equations.

But you may see that this Ansatz for the solution violates the time-reversal symmetry. Why are we looking to the past and not to the future? Obviously, the field configuration where "t-r/c" is replaced by "t+r/c" is a solution, too. It's just a little bit counterintuitive.
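To make the "t-r/c" versus "t+r/c" choice concrete, here is a minimal sketch that finds the moment at which a moving charge has to be sampled. Everything in it is an assumption made for illustration: the charge moves uniformly along the x-axis, the units have "c=1" by default, and the function names are hypothetical. The retarded and the advanced time are found by the same bisection; only a sign differs.

```python
import math


def source_position(t, v):
    """Hypothetical trajectory: uniform motion along the x-axis."""
    return (v * t, 0.0, 0.0)


def emission_time(t, obs, v, c=1.0, sign=-1, iters=200):
    """Solve |t_s - t| = |obs - x_s(t_s)| / c for the source time t_s.

    sign=-1 gives the retarded time (t_s < t, the "t - r/c" choice),
    sign=+1 gives the advanced time (t_s > t, the "t + r/c" choice).
    """
    if abs(v) >= c:
        raise ValueError("the source must move slower than light")

    def mismatch(tau):
        # tau is the time gap; it is too small while mismatch < 0
        source = source_position(t + sign * tau, v)
        return tau - math.dist(obs, source) / c

    # For |v| < c the gap cannot exceed d0 / (c - |v|), where d0 is the
    # distance to the source at the observation time itself.
    lo = 0.0
    hi = math.dist(obs, source_position(t, v)) / (c - abs(v))
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mismatch(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return t + sign * 0.5 * (lo + hi)


obs = (3.0, 4.0, 0.0)
print(emission_time(10.0, obs, v=0.5, sign=-1))  # retarded time
print(emission_time(10.0, obs, v=0.5, sign=+1))  # advanced time
```

For a charge at rest the two answers reduce to "t-r/c" and "t+r/c" with a fixed "r"; for a moving charge they are no longer symmetric around "t", but both remain perfectly good solutions of the same condition.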

Both of the expressions, the advanced and the retarded ones, solve Maxwell's equations with the same sources. That's not too shocking because their difference solves Maxwell's equations with no sources. While this electromagnetic field (the difference) has no sources, it still depends on the trajectories of charged particles - it projects "past minus future" light cones around every point that the charged particle ever visited.

If you look at it rationally, the difference between the two ways how to write the solution is just a subtlety about the way how to find the solutions. The "homogeneous" part of the solution to the differential equation is ultimately determined by the initial conditions for the electromagnetic field.

But Wheeler and Feynman believed that some subtlety about the combination of retarded and advanced solutions was the right mechanism that Nature uses to get rid of the divergent self-energy. As far as I can say, this argument of theirs has never made any sense. Even in the classical theory, there would still be a divergent integral of "E^2" I discussed previously. So in the best case, they found a method how to isolate the divergent part and how to subtract it - a classical analogue of the renormalization procedure in quantum field theory.

However, these Wheeler-Feynman games soon made Feynman look at quantum mechanics from a "spacetime perspective". This spacetime perspective ultimately made Lorentz invariance much more manifest than it was in Heisenberg's and especially Schrödinger's picture. Moreover, the resulting path integrals and Feynman diagrams contain several memories of the Wheeler-Feynman original "retrocausal" motivation:

Quite on the contrary. Calculations that are not time-reversal symmetric are very useful. And macroscopic phenomena that violate the time-reversal symmetry are no exceptions: they're the rule because of the second law of thermodynamics. Obviously, this law applies to all systems with a macroscopically high entropy and electromagnetic waves are no exception. In this context, the second law makes the waves diffuse everywhere.

The point about the breaking of symmetries by physics and calculations is often misunderstood by the laymen (including those selling themselves as top physicists). Many people think that if a theory has a symmetry, every history allowed by the theory must have the same symmetry and/or every calculation of anything we ever make is obliged to preserve the symmetry. This nonsensical opinion lies at the core of the ludicrous statements e.g. that "string theory is not background independent".

Retrocausality of the classical actions

Feynman's path integral formalism may also be viewed as the approach to quantum mechanics that treats the whole spacetime as a single entity. The slices of spacetime at a fixed "t" don't play much role in this approach. That's also why two of Feynman's major path integral papers have the following titles:

However, if you analyze what the principle means mathematically, you will find out that it is exactly equivalent to differential equations for "x(t)", to a variation of the well-known "F=ma" laws. You need to know the mathematical tricks that Lagrange has greatly improved. But if you learn this variational calculus, the equivalence will be clear to you.
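The equivalence can be illustrated numerically, at least in one simple case. The sketch below is my own toy example and not anything from Feynman's papers: a particle in a uniform gravitational field with the Lagrangian "m.v^2/2 - m.g.x", both endpoints fixed at "x=0", and a discretized action. The path that obeys "F=ma" (a parabola) is compared with paths deformed by a bump that vanishes at both ends.

```python
import math


def action(path, dt, m=1.0, g=9.81):
    """Discretized action for L = m*v^2/2 - m*g*x (kinetic minus potential)."""
    total = 0.0
    for x0, x1 in zip(path, path[1:]):
        velocity = (x1 - x0) / dt
        total += (0.5 * m * velocity**2 - m * g * 0.5 * (x0 + x1)) * dt
    return total


def trial_path(eps, T=1.0, n=1000, g=9.81):
    """The F=ma solution with x(0)=x(T)=0, i.e. x = g*t*(T-t)/2,
    plus a bump eps*sin(pi*t/T) that keeps both endpoints fixed."""
    dt = T / n
    times = [k * dt for k in range(n + 1)]
    path = [0.5 * g * t * (T - t) + eps * math.sin(math.pi * t / T)
            for t in times]
    return path, dt


for eps in (-0.1, 0.0, 0.1):
    path, dt = trial_path(eps)
    print(eps, action(path, dt))
```

The undeformed path has the smallest action and the excess grows like the square of the bump's amplitude, so the condition "the action is stationary" singles out exactly the trajectory that the differential equation would give. Nothing about the future had to be known beyond the endpoint that was imposed by hand.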

So while the principle "linguistically" makes the present depend both on the past and the future - here I define a linguist as a superficial person who gets easily confused by what the words "seem" to imply - the correct answer is that the laws described by the principle of least action actually don't make the present depend on the future.

The situation is analogous at the quantum level, too. You may sum amplitudes for histories that occur in the whole spacetime. But the local observations will always be affected just by the facts about their neighborhood in space and in time. To know whether there's any acausality or non-locality in physics, you have to properly calculate it. The answer of quantum field theory is zero.

Self-energy in quantum field theory

The picture that got settled in quantum field theory - and of course, string theory doesn't really change anything about it - is that the time-reversal symmetry always holds exactly at the fundamental level; antiparticles may be related to holes in the sea of particles with negative energy, which is equivalent to their moving backward in time (creation is replaced by annihilation).

And what about the original problem of self-energy? It has reappeared in the quantum formalism but it was shown not to spoil any physical predictions.

Various integrals that express Feynman diagrams are divergent but the divergences are renormalized. All of the predictions may ultimately be unambiguously calculated by setting a finite number of types of divergent integrals to the experimentally measured values (mass of the electron, the fine-structure constant, the vanishing mass of the photon, the negligible vacuum energy density, etc.).

Equivalently, you may also think of the removal of the divergent contributions as an effect of the "counterterms". The energy of some objects simply gets a new, somewhat singularly looking contribution. When you add it to the divergent "integral of squared electric field", the sum will be finite.

In some sense, you don't have to invent a story "where the canceling term comes from". What matters is that you can add the relevant terms to the action - and you have to add them to agree with the most obvious facts about the observations e.g. that the electron mass is finite.

There are different ways how to "justify" that the infinite pieces are removed or canceled by something else. But if you still need lots of these "justifications", you either see inconsistencies that don't really exist; or you are not thinking about these matters quite scientifically. Why?

Because science dictates that it's the agreement of the predictions with the observations that all justifications ultimately have to boil down to. And indeed, the robust computational rules of quantum field theory - with a finite number of parameters - can correctly predict pretty much all experiments ever done with elementary particles. So if you find its rules counterintuitive or ugly, there exists huge empirical evidence that your intuition or sense of beauty is seriously impaired. It doesn't matter what your name is and how pretty you are, you're wrong.

Maybe, if you will spend a little bit more time with the actual rules of quantum field theory, you may improve both your intuition and your aesthetic sense.

However, the insights about the Renormalization Group in the 1970s, initiated by Ken Wilson, brought a new, more philosophically complete understanding of what was going on with the renormalization. It was understood that the long-distance limit of many underlying theories is often universal and described by "effective quantum field theories" that usually have a finite number of parameters ("deformations") only.

The calculations that explicitly subtract infinities to get finite results are just the "simplest" methods to describe the long-distance physical phenomena in any theory belonging to such a universality class. In this "simplest" approach, it is just being assumed that the "substructure that regulates things" appears at infinitesimally short distances. The long-distance physics won't depend on the short-distance details - an important point you must appreciate. It really means that 1) you don't need the unknown short-distance physics, 2) you can't directly deduce the unknown short-distance physics from the observations, 3) you may still believe that the short-distance physics would match your sense of beauty.

You can still ask: what is the actual short-distance physics? As physics became fully divided according to the distance/energy scale in the 1970s, people realized that to address questions at shorter distances, you need to understand processes at higher energies (per particle) - either experimentally or theoretically. So it's getting hard.

But it's true that the only "completions" of physics of divergent quantum field theories that are known to mankind are quantum field theories and string theory (the latter is necessary when gravity in 3+1 dimensions or higher is supposed to be a part of the picture). Any statement that a "new kind of theory" (beyond these two frameworks) could reduce to the tested effective quantum field theories at long distances is extremely bold and requires extraordinary evidence.

In some sense, each (independently thinking) physicist is following the footsteps of the history. So let's return to the early 20th century, or to the moment when I was 15 years old or so. Remember: these two moments are not identical - they're just analogous. ;-)

Divergences in classical physics

Newton's first conceptual framework was based on classical mechanics: coordinates x(t) of point masses obeyed differential equations. That was his version of a theory of everything. He had to make so many steps and he has extended the reach of science so massively that it wouldn't be shocking if he had believed that he had found a theory of everything. However, Newton was modest and he kind of appreciated that his theory was just "effective", using a modern jargon.

People eventually applied these laws to the motion of many particles that constituted a continuum (e.g. a liquid). Effectively, they invented or derived the concept of a field. Throughout the 19th century, most of the physicists - including the top ones such as James Clerk Maxwell - would still believe that Newton's framework was primary: there always had to be some "corpuscles" or atoms behind any phenomenon. There had to be some x(t) underlying everything.

As you know, these misconceptions forced the people to believe in the luminiferous aether, a hypothetical substance whose mechanical properties looked like the electromagnetic phenomena. The electromagnetic waves (including light) were thought to be quasi-sound waves in a new environment, the aether. This environment had to be able to penetrate anything. It had many other bizarre properties, too.

Hendrik Lorentz figured out that the empirical evidence for the electromagnetic phenomena only supported one electric vector E and one magnetic vector B at each point. A very simple environment, indeed. Everything else was derived. Lorentz explained how the vectors H, D are related to B, E in an environment.

Of course, people already knew Maxwell's equations but largely because Newton's framework was so successful and so imprinted in their bones, people assumed it had to be fundamental for everything. Fields "couldn't be" fundamental until 1905 when Albert Einstein, elaborating upon some confused and incomplete findings by Lorentz, realized that there was no aether. The electromagnetic field itself was fundamental. The vacuum was completely empty. It had to be empty of particles, otherwise the principle of relativity would have been violated.

This was a qualitative change of the framework. Instead of a finite number of observables "x(t)" - the coordinates of elementary particles - people suddenly thought that the world was described by fields such as "phi(x,y,z,t)". Fields depended both on space and time. The number of degrees of freedom grew by an infinite factor. It was a pretty important technical change but physics was still awaiting much deeper, conceptual transformations. (I primarily mean the switch to the probabilistic quantum framework but its epistemological features won't be discussed in this article.)

The classical field theory eventually became compatible with special relativity - Maxwell's equations were always compatible with it (as shown by Lorentz) even when people didn't appreciate this symmetry - and with general relativity.

However, in the 19th century, it wasn't possible to realistically describe matter in terms of fields. So people believed in some kind of a dual description whose basic degrees of freedom included both coordinates of particles as well as fields. These two portions of reality had to interact in both ways.

(To reveal it in advance, quantum field theory finally unified particles and fields. All particles became just quanta of fields. Some fields such as the Dirac field used to be known just as particles - electrons - while others were originally known as fields and not particles - such as photons that were appreciated later.)

However, the electromagnetic field induced by a charged point-like particle is kind of divergent. In particular, the electrostatic potential goes like "phi=Q/r" in certain units. The total energy of the field may either be calculated as the volume integral of "E^2/2", or as a volume integral of "phi.rho". The equivalence may be easily shown by integration by parts.

Whatever formula you choose, it's clear that point-like sources will carry an infinite energy. For example, the electrostatic field around the point-like source goes like "E=Q/r^2", its square (over two) is "E^2/2 = Q^2 / 2r^4", and this goes so rapidly to infinity for small "r" that even if you integrate it over volume, with the "4.pi.r^2.dr" measure, you still get an integral of "1/r^2" from "r_0" to infinity, where "r_0" is the cutoff minimum distance. That integral grows as "1/r_0" when the cutoff is sent to zero.

If you assume that the source is really point-like, "r_0=0", its energy is divergent. That was a very ugly feature of the combined theory of fields and point-like sources.
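As a rough numerical sketch of this scaling (the function name, the finite outer radius `r_max` and the grid size are assumptions made only for illustration), one can integrate "E^2/2" over the region outside a cutoff radius and watch the result grow like "1/r_0". The same units as in the text are used, with purely numerical factors dropped from "E=Q/r^2".

```python
import math

def field_energy(q, r0, r_max=1e6, n=20000):
    """Integrate E^2/2 over the shell r0 < r < r_max, with E = q / r^2."""
    # Substitute r = exp(s) so that the steep integrand is sampled evenly in log r.
    s0, s1 = math.log(r0), math.log(r_max)
    h = (s1 - s0) / n
    total = 0.0
    for i in range(n):
        r = math.exp(s0 + (i + 0.5) * h)       # midpoint of the i-th cell in log r
        density = (q / r**2) ** 2 / 2           # energy density E^2 / 2
        total += density * 4 * math.pi * r**2 * (r * h)   # dV = 4 pi r^2 dr, dr = r ds
    return total

# Shrinking the cutoff by a factor of 10 multiplies the energy by about 10.
for r0 in (1e-2, 1e-3, 1e-4):
    print(r0, field_energy(1.0, r0))
```

In these units the exact value is "2.pi.Q^2 (1/r_0 - 1/r_max)", so the outer radius hardly matters while the inner cutoff controls everything.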

Because the classical theory based on particles and fields was otherwise so meaningful and convincing - a clear candidate for a theory of everything, as Lord Kelvin and others appreciated much more than Newton and much more than they should have :-) - it was natural to try to solve the last tiny problems with that picture. The divergent electrostatic self-energy was one of them.

You can try to remove it by replacing the point-like electrons with finite lumps of matter (non-point-like elementary objects), by adding non-linearities (some of them may create electron-like "solitons", smooth localized solutions), by the Wheeler-Feynman absorber theory, and by other ideas. However, all of these ideas may be seen to be redundant or wrong if you get to quantum field theory which really explains what's going on with these particles and interactions.

On the other hand, each of these defunct ideas has left some "memories" in modern physics. While the ideas were wrong solutions to the original problem, they haven't quite disappeared.

First, one of the assumptions that was often accepted even by the physicists who were well aware of the quantum phenomena was that one has to "fix" the classical theory's divergences before one quantizes it. You shouldn't quantize a theory with problems.

This was an arbitrary irrational assumption that couldn't have been proved. The easiest way to see that there was no proof is to notice that the assumption is wrong and there can't be any proofs of wrong statements. ;-) The actual justification of this wrong assumption was that the quantization procedure was so new and deserved such a special protection that you shouldn't try to feed garbage into it. However, this expectation was wrong.

More technically, we can see that the quantum phenomena "kick in" long before the problems with the divergent classical self-energy become important. Why?

It's because, as we have calculated, the self-energy forces us to impose a cutoff "r_0" at the distance such that

Q^2 / r_0 = m

I have set "hbar=c=1", something that would be easy and common for physicists starting with Planck, and I allowed the self-energy to actually produce the correct rest mass of the electron, "m". (There were confusions about a factor of 3/4 in "E=mc^2" for a while, confusions caused by the self-energy considerations, but let's ignore this particular historical episode because it doesn't clarify too much about the correct picture.)

The purely numerical factors such as "1/4.pi.epsilon_0" are omitted in the equation above. "Q" is the elementary electron charge.

At any rate, the distance "r_0" that satisfies the condition above is "Q^2/m", so it is approximately 137 times shorter than "1/m", than the Compton wavelength of the electron: recall the value of the fine-structure constant. This distance is known as the classical radius of the electron.

But because the fine-structure constant is (much) smaller than one, the classical radius of the electron is shorter than the Compton wavelength of the electron. So if you approach shorter distances, long before you get to the "structure of the electron" that is responsible for its finite self-energy, you hit the Compton wavelength (something in between the nuclear and atomic radius) where the quantum phenomena cannot be neglected.
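To put numbers on the two length scales, here is a small sketch in SI units. The constants are the CODATA 2018 recommended values, the variable names are mine, and "1/m" in the text is read as the reduced Compton wavelength "hbar/(m.c)".

```python
# CODATA 2018 recommended values (SI units)
ALPHA = 7.2973525693e-3      # fine-structure constant
HBAR = 1.054571817e-34       # reduced Planck constant, J s
M_E = 9.1093837015e-31       # electron mass, kg
C = 299792458.0              # speed of light, m/s

compton = HBAR / (M_E * C)            # reduced Compton wavelength, "1/m" in natural units
classical_radius = ALPHA * compton    # "Q^2/m" in natural units

print("reduced Compton wavelength [m]:", compton)
print("classical electron radius  [m]:", classical_radius)
print("ratio:", compton / classical_radius)
```

The ratio is just the inverse fine-structure constant, which is why the quantum scale is reached about 137 times "earlier" than the scale where the classical self-energy would matter.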

So the electron's self-energy is a "more distant" problem than quantum mechanics. That's why you should first quantize the electron's electric field and then solve the problems with the self-energy. That's of course what quantum field theory does for you. It was premature to solve the self-energy problem in classical physics because in the real world, all of its technical features get completely modified by quantum mechanics.

This simple scaling argument shows that all the attempts to regulate the electron's self-energy in the classical theory were irrelevant for the real world, to say the least, and misguided, to crisply summarize their value. But let's look at some of them.

Born-Infeld model

One of the attempts was to modify physics by adding nonlinearities in the Lagrangian. The Born-Infeld model is the most famous example. It involves a square root of a determinant that replaces the simple "F_{mn}F^{mn}" term in the electromagnetic Lagrangian. This "F^2" term still appears if you Taylor-expand the square root.
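A minimal check of the Taylor-expansion remark, restricted to a purely electric field for simplicity. In that special case the Born-Infeld Lagrangian density reduces to "b^2 (1 - sqrt(1 - E^2/b^2))", where "b" is the maximal field strength of the model. The function names and the choice "b=1" are assumptions made for this illustration only.

```python
import math

def born_infeld_electric(e_field, b=1.0):
    """Born-Infeld Lagrangian density for a purely electric field."""
    return b * b * (1.0 - math.sqrt(1.0 - (e_field / b) ** 2))

def maxwell_electric(e_field):
    """Ordinary Maxwell Lagrangian density E^2/2 for a purely electric field."""
    return 0.5 * e_field ** 2

for e_field in (0.5, 0.1, 0.001):
    print(e_field, born_infeld_electric(e_field), maxwell_electric(e_field))
```

For fields much weaker than "b", the two columns agree, and the first correction is "E^4/(8.b^2)". The nonlinearity only matters at strong fields, that is, at short distances from the source.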

Using a modern language, this model adds some higher-derivative terms that modify the short-distance physics in such a way that there's a chance that the self-energy will become finite. Don't forget that this whole "project proposal" based on classical field theory is misguided.

While this solution wasn't helpful to solve the original problem, the particular action is "sexy" in a certain way and it has actually emerged from serious physics - as the effective action for D-branes in string theory. But I want to look at another school, the Wheeler-Feynman absorber theory.

The Wheeler-Feynman absorber theory

The Wheeler-Feynman absorber theory starts with a curious observation about the time-reversal symmetry.

Both authors of course realized that the macroscopic phenomena are evidently irreversible. But the microscopic laws describing very simple objects - such as one electron - should be and are T-invariant. They don't change if you exchange the past and the future. This symmetry was easily seen in the equations of mechanics or electromagnetism (and its violations by the weak force were unknown at the time).

However, there exists a valid yet seemingly curious way to calculate the field around moving charged objects. The result is known as the Liénard–Wiechert potentials.

A static charge has a "1/r" potential around it. But how do we calculate the field if the charge is moving? Well, the answer can actually be written down explicitly. The potential (including the vector potential "A") at a given moment "t" in time may be calculated as the superposition of the potentials induced by other charges in the Universe.

Each charge contributes something like "Q/r" to the potential at a given point - and it's multiplied by the velocity of the sources if they're nonzero and if you calculate the vector potential. But the funny feature is that you must look where the charges were in the past - exactly at the right moment such that the signal from those sources would arrive at the point "x, t" whose "phi, A" you're calculating, at the right moment, by the speed of light.

So the potential "phi(t)" (and "A(t)") depends on charge densities "rho(t-r/c)" (and the currents "j(t-r/c)") where "c" is the speed of light and "r" is the distance between the source and the probe. One can actually write this formula exactly. It's simple and it solves Maxwell's equations.
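The recipe can be sketched numerically for a single point charge. The code below finds the retarded time by bisection and evaluates the standard Liénard–Wiechert scalar potential, "Q/(R - R.v/c)", where "R" is the separation vector from the retarded position of the charge to the probe. Units with "c=1", the dropped numerical prefactors, and all function names are assumptions of this sketch. The closed form used for comparison is the textbook result for a charge in uniform motion.

```python
import math

C = 1.0  # speed of light; units with c = 1 are an assumption of this sketch


def retarded_time(pos_fn, x, t, iterations=100):
    """Solve t - t_r = |x - pos_fn(t_r)| / C for t_r by bisection."""
    def light_cone_gap(tr):
        return (t - tr) - math.dist(x, pos_fn(tr)) / C

    span = 1.0
    while light_cone_gap(t - span) < 0:   # step back until a signal has time to arrive
        span *= 2.0
    lo, hi = t - span, t                  # gap(lo) >= 0 >= gap(hi)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if light_cone_gap(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def scalar_potential(q, pos_fn, vel_fn, x, t):
    """Lienard-Wiechert scalar potential of a point charge (prefactors dropped)."""
    tr = retarded_time(pos_fn, x, t)
    sep = [a - b for a, b in zip(x, pos_fn(tr))]
    dist = math.sqrt(sum(s * s for s in sep))
    sep_dot_v = sum(s * v for s, v in zip(sep, vel_fn(tr)))
    return q / (dist - sep_dot_v / C)


def uniform_motion_potential(q, v, x, t):
    """Closed form for a charge moving along the x axis with constant speed v < C."""
    dx = x[0] - v * t
    rho2 = x[1] ** 2 + x[2] ** 2
    return q / math.sqrt(dx * dx + (1.0 - (v / C) ** 2) * rho2)


v = 0.6
probe, now = (1.0, 2.0, 0.5), 0.3
numeric = scalar_potential(1.0, lambda s: (v * s, 0.0, 0.0),
                           lambda s: (v, 0.0, 0.0), probe, now)
print(numeric, uniform_motion_potential(1.0, v, probe, now))
```

The bisection works because the "light-cone gap" decreases monotonically in the emission time as long as the charge moves slower than light, so there is exactly one retarded moment for each probe point.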

But you may see that this Ansatz for the solution violates the time-reversal symmetry. Why are we looking to the past and not to the future? Obviously, the field configuration where "t-r/c" is replaced by "t+r/c" is a solution, too. It's just a little bit counterintuitive.

Both of the expressions, the advanced and the retarded ones, solve Maxwell's equations with the same sources. That's not too shocking because their difference solves Maxwell's equations with no sources. While this electromagnetic field (the difference) has no sources, it still depends on the trajectories of charged particles - it projects "past minus future" light cones around every point that the charged particle ever visited.

If you look at it rationally, the difference between the two ways of writing the solution is just a subtlety about the method of finding the solutions. The "homogeneous" part of the solution to the differential equation is ultimately determined by the initial conditions for the electromagnetic field.

But Wheeler and Feynman believed that some subtlety about the combination of retarded and advanced solutions was the right mechanism that Nature uses to get rid of the divergent self-energy. As far as I can say, this argument of theirs has never made any sense. Even in the classical theory, there would still be the divergent integral of "E^2" I discussed previously. So in the best case, they found a method of isolating the divergent part and subtracting it - a classical analogue of the renormalization procedure in quantum field theory.

However, these Wheeler-Feynman games soon made Feynman look at quantum mechanics from a "spacetime perspective". This spacetime perspective ultimately made Lorentz invariance much more manifest than it was in Heisenberg's and especially Schrödinger's picture. Moreover, the resulting path integrals and Feynman diagrams contain several memories of the original Wheeler-Feynman "retrocausal" motivation:

- the Feynman propagators, extending the retarded and advanced electromagnetic potentials, are half-retarded, half-advanced (a semi-retrocausal prescription)

- antiparticles in Feynman diagrams are just particles with negative energies that propagate backwards in time

Quite on the contrary. Calculations that are not time-reversal symmetric are very useful. And macroscopic phenomena that violate the time-reversal symmetry are no exceptions: they're the rule because of the second law of thermodynamics. Obviously, this law applies to all systems with a macroscopically high entropy and electromagnetic waves are no exception. In this context, the second law makes the waves diffuse everywhere.

The point about the breaking of symmetries by physics and calculations is often misunderstood by the laymen (including those selling themselves as top physicists). Many people think that if a theory has a symmetry, every history allowed by the theory must have the same symmetry and/or every calculation of anything we ever make is obliged to preserve the symmetry. This nonsensical opinion lies at the core of the ludicrous statements e.g. that "string theory is not background independent".

Retrocausality of the classical actions

Feynman's path integral formalism may also be viewed as the approach to quantum mechanics that treats the whole spacetime as a single entity. The slices of spacetime at a fixed "t" don't play much of a role in this approach. That's also why Feynman's two major path integral papers have the following titles:

Space-time approach to non-relativistic quantum mechanics

Space-time approach to quantum electrodynamics

This "global" or "eternalist" perspective also exists in the classical counterpart of Feynman's formulation, namely in the principle of least action. Mephisto has complained that the principle is "retrocausal" - that you're affected by the future. Why? The principle says:

The particle (or another system) is just going to move in such a way that the action evaluated between a moment in the past and a moment in the future is extremized among all trajectories with the same endpoints.

At the linguistic level, this principle requires you to know lots about the particle's behavior in the past as well as in the future if you want to know what the particle is going to do right now. Such a dependence on the future would violate causality, the rule that the present can only be affected by the past but not by the future.

However, if you analyze what the principle means mathematically, you will find out that it is exactly equivalent to differential equations for "x(t)", to a variation of the well-known "F=ma" laws. You need to know the mathematical tricks that Lagrange greatly improved. But if you learn this variational calculus, the equivalence will be clear to you.
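The equivalence can be illustrated with a toy numerical experiment. The sketch below discretizes the action of a particle in a uniform gravitational field, "L = m.v^2/2 - m.g.x", fixes both endpoints, and lowers the action by plain gradient descent. The particular system, the grid size, the step size and the function names are all assumptions chosen for illustration. The stationary path that comes out obeys the local, perfectly causal law "x'' = -g" at every interior point.

```python
def action(path, dt, m=1.0, g=9.8):
    """Discretized action: sum of (kinetic - potential) * dt over the segments."""
    total = 0.0
    for a, b in zip(path, path[1:]):
        kinetic = 0.5 * m * ((b - a) / dt) ** 2
        potential = m * g * 0.5 * (a + b)
        total += (kinetic - potential) * dt
    return total


def extremize(x_start, x_end, total_time, n=20, steps=20000, m=1.0, g=9.8):
    """Gradient descent on the discretized action with both endpoints held fixed."""
    dt = total_time / n
    # Initial guess: a straight line between the endpoints.
    path = [x_start + (x_end - x_start) * i / n for i in range(n + 1)]
    rate = 0.45 * dt / m   # small enough for the descent to be stable
    for _ in range(steps):
        # dS/dx_i for each interior point, computed from the current path
        grad = [m * (2 * path[i] - path[i - 1] - path[i + 1]) / dt - m * g * dt
                for i in range(1, n)]
        for i, g_i in enumerate(grad, start=1):
            path[i] -= rate * g_i
    return path, dt


path, dt = extremize(0.0, 0.0, 2.0)
# Discrete acceleration at each interior point; every entry should be close to -9.8.
accel = [(path[i + 1] - 2 * path[i] + path[i - 1]) / dt**2 for i in range(1, len(path) - 1)]
print(min(accel), max(accel))
print("action of the result:", action(path, dt), " straight line:", action([0.0] * 21, dt))
```

Setting the gradient of the discretized action to zero gives "x_{i+1} - 2.x_i + x_{i-1} = -g.dt^2", which is just the finite-difference form of Newton's second law. The "global" condition on the whole path and the "local" differential equation select the same trajectory.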

So while the principle "linguistically" makes the present depend both on the past and the future - here I define a linguist as a superficial person who gets easily confused by what the words "seem" to imply - the correct answer is that the laws described by the principle of least action actually don't make the present depend on the future.