The sloppiness on John Cook's blog is unlikely to be coincidental

In this text, I would like to settle the question of what the climate sensitivity (the warming attributed to a CO2 doubling) is, based on the observed CO2 concentrations and global mean temperatures since 1850. Right at the beginning, let me tell you that the result will be 1.66 °C if all the observed global warming were due to the CO2 growth.

This text will be vastly more accurate and less sketchy than a text by Keith Pickering about the climate zombies (us) at John Cook's blog.

Growing CO2 concentration

First, let me offer you an excellent Ansatz for the CO2 concentration in a given year "year" of the industrial era. We won't usually need it, but it's simply:

conc(year) = 280 ppm + 22.3 ppm * exp[(year-1920) / 57]

You see that for an early year such as 1776, the concentration is close to 280 ppm. For the year 1920, it is chosen to be 280 + 22.3 = 302.3 ppm, and the deviation from 280 ppm grows exponentially with an e-folding time of 57 years (the time in which the deviation increases 2.718 times).

You may also calculate that for the year 2010, the formula predicts 388 ppm, and that the current annual growth of the CO2 concentration, i.e. the difference between 2009 and 2010, is around 1.88 ppm per year. As you can see, the formula behaves very sensibly in the past (recent decades and centuries) and in the present.
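The Ansatz is easy to check numerically. Below is a minimal Python sketch; the function name `conc` simply mirrors the text and is otherwise an illustrative choice. It evaluates the formula for 1776, 1920 and 2010, the 2009 to 2010 increment, and the e-folding property.

```python
import math

def conc(year: float) -> float:
    """CO2 concentration in ppm from the exponential Ansatz in the text."""
    return 280.0 + 22.3 * math.exp((year - 1920.0) / 57.0)

# Pre-industrial limit, the 1920 anchor point and the 2010 value.
for y in (1776, 1920, 2010):
    print(y, round(conc(y), 1), "ppm")

# Annual growth as a finite difference between consecutive years.
print("growth 2009 -> 2010:", round(conc(2010) - conc(2009), 2), "ppm/yr")

# After one e-folding time (57 years) the excess over 280 ppm grows by a factor e.
print("excess ratio 1977/1920:", (conc(1977) - 280.0) / (conc(1920) - 280.0))
```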

However, the formula also predicts a continuation of the exponential growth into the future, which I find very unlikely. For the year 2100, it predicts about 805 ppm, while a more realistic estimate is below 600 ppm because the annual growth is somewhat likely to remain below 2 ppm per year. So keep in mind that the formula for the CO2 concentration is accurate and well established up to the present, but it may deviate in the future.
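To see how far apart the two scenarios for 2100 are, here is a small Python comparison. `conc_linear` is a hypothetical alternative introduced only for illustration: it assumes the 2010 value from the Ansatz followed by a constant growth of 2 ppm per year. It is not a forecast from the text.

```python
import math

def conc(year: float) -> float:
    """Exponential Ansatz from the text, in ppm."""
    return 280.0 + 22.3 * math.exp((year - 1920.0) / 57.0)

def conc_linear(year: float, start: int = 2010, rate: float = 2.0) -> float:
    """Hypothetical scenario: constant growth of `rate` ppm/yr after `start`."""
    return conc(start) + rate * (year - start)

print("exponential Ansatz, 2100:", round(conc(2100)), "ppm")
print("constant 2 ppm/yr, 2100: ", round(conc_linear(2100)), "ppm")
```

Even the constant-growth scenario, which is arguably generous, stays below 600 ppm in 2100.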

Formula for the greenhouse warming

Another formula I will be using is the climate-model-based quasi-logarithmic function that calculates the no-feedback warming induced by an increase of the CO2 concentration. The relevant functions can be found in the IPCC report and elsewhere:

tempPREP[conc_] := Log[1 + 1.2 conc + 0.005 conc^2 + 0.0000014 conc^3];

temp[conc_] := tempPREP[conc] - tempPREP[280];

The "tempPREP" function is only meaningful up to an additive shift; the shift in "temp" is chosen so that "temp = 0" for "conc = 280 ppm", a pre-industrial baseline. You see that the function is essentially a logarithm. However, to be more accurate, the argument of the logarithm contains some nonlinear terms in the concentration. It's still true that the warming induced by the increase from 280 ppm to 560 ppm is about 1.2 °C:

temp[560] = 1.186 °C.
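For readers without Mathematica, here is a straightforward Python transcription of "tempPREP" and "temp". The snake_case names are just a translation of the text's names. `math.log` is the natural logarithm, matching Mathematica's `Log`.

```python
import math

def temp_prep(conc: float) -> float:
    """Quasi-logarithmic no-feedback term (natural log), defined up to a shift."""
    return math.log(1 + 1.2 * conc + 0.005 * conc**2 + 0.0000014 * conc**3)

def temp(conc: float) -> float:
    """No-feedback warming in deg C relative to the 280 ppm baseline."""
    return temp_prep(conc) - temp_prep(280)

print("temp(280) =", temp(280))
print("temp(560) =", round(temp(560), 3), "deg C")
```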

The logarithm in the formula above is natural, and you need to be careful about the base as well as about the nonlinear terms to get the right figure.
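A quick Python check shows how much each pitfall matters for the 280 to 560 ppm step. It compares the full polynomial with a natural log, the same polynomial with a base-10 log, and a plain logarithm of the concentration ratio with the nonlinear terms dropped. The helper name `poly` is illustrative.

```python
import math

def poly(c: float) -> float:
    """Argument of the logarithm in tempPREP."""
    return 1 + 1.2 * c + 0.005 * c**2 + 0.0000014 * c**3

ratio = poly(560) / poly(280)
print("natural log, full polynomial: ", round(math.log(ratio), 3))
print("base-10 log, full polynomial: ", round(math.log10(ratio), 3))
print("natural log of 560/280 alone: ", round(math.log(560 / 280), 3))
```

Only the first variant reproduces the 1.186 °C figure. Using the wrong base or dropping the nonlinear terms changes the answer substantially.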

This figure includes no feedbacks. I will assume that the feedbacks simply multiply "temp[conc]" by a universal coefficient (and add a universal additive shift).

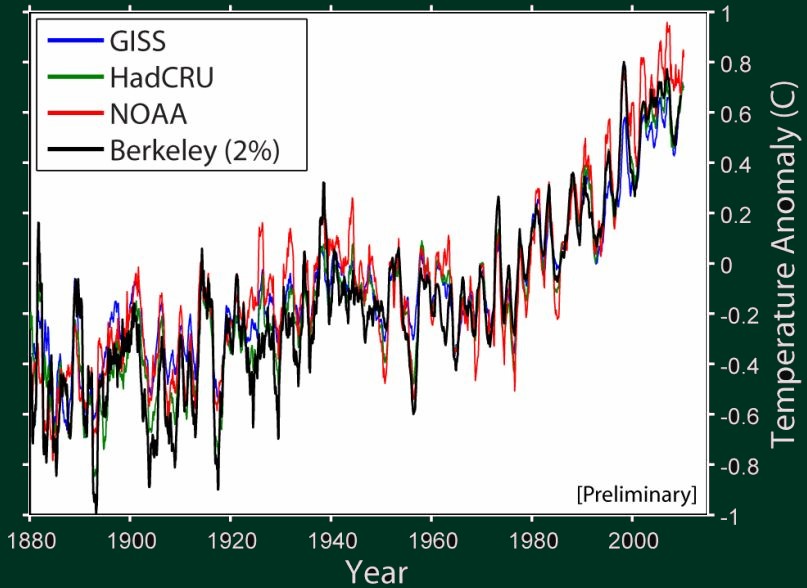

Fine, so take all the HadCRUT3 annual global temperatures from 1850 to 2010 and draw a simple graph: on the x-axis, you will have the temperature increase "temp[conc[year]]" predicted by the no-feedback formula above (whose climate sensitivity is 1.186 °C); on the y-axis, you will have the actual temperature anomaly from the HadCRUT3 tables. The graph will look like this:

The data points are not far from a straight line. On the other hand, the fit is not perfect: some low-concentration data points (those on the left) deviate from the predicted temperature by as much as 0.7 °C. However, the correlation coefficient is obviously rather high. The linear regression gives you

HadCRUT3 anomaly[year] = -0.48 °C + 1.40 * temp[conc[year]]

The absolute term -0.48 °C depends on the HadCRUT3 baseline. What is important is the coefficient 1.40, which gives us the amplification by feedbacks as extracted from the actual observed CO2 and HadCRUT3 temperature data, while attributing all the temperature changes to CO2. Because the coefficient exceeds 1.0, the data interpreted in this way imply a weak positive feedback.
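The regression step is ordinary least squares of the anomaly on "temp[conc[year]]". Below is a minimal Python sketch of that fit and of the Pearson correlation coefficient. The HadCRUT3 series is not reproduced here. In real use, `xs` would be `temp(conc(year))` for each year from 1850 to 2010 and `ys` the corresponding annual anomalies. The demo uses synthetic points built to lie exactly on the quoted line, so this is only a sanity check of the fitting code and not a reproduction of the result.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / math.sqrt(sxx * syy)

# Synthetic sanity check only (NOT HadCRUT3 data): exact points on y = -0.48 + 1.40 x.
xs = [0.0, 0.1, 0.2, 0.4, 0.6, 0.76]
ys = [-0.48 + 1.40 * x for x in xs]
a, b = fit_line(xs, ys)
print(round(a, 2), round(b, 2), round(pearson_r(xs, ys), 3))
```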

We have extracted the best linear fit from the observed data and it tells us that

warming[conc_] := 1.40 * temp[conc]

where the function "temp", involving a logarithm, was defined at the top. In particular,

warming [280 ppm] = 0 °C,

warming [560 ppm] = 1.66 °C.

The first line verifies that the baseline of "temp" was chosen at the "average" pre-industrial temperature. The second line is the total warming you obtain from a CO2 doubling, and it is 1.66 °C. Once again, this figure was obtained by assuming that all warming observed since 1850 should be attributed to CO2.
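The fitted "warming" function is just the no-feedback function scaled by the regression slope. Here is a Python sketch. The constant name `FEEDBACK` is an illustrative label for the 1.40 coefficient quoted above, and the other names mirror the text.

```python
import math

def temp_prep(conc: float) -> float:
    """Quasi-logarithmic no-feedback term (natural log)."""
    return math.log(1 + 1.2 * conc + 0.005 * conc**2 + 0.0000014 * conc**3)

def temp(conc: float) -> float:
    """No-feedback warming in deg C relative to 280 ppm."""
    return temp_prep(conc) - temp_prep(280)

FEEDBACK = 1.40  # regression slope quoted in the text

def warming(conc: float) -> float:
    """Warming in deg C, attributing all observed warming to CO2."""
    return FEEDBACK * temp(conc)

print("warming(280) =", round(warming(280), 2), "deg C")
print("warming(560) =", round(warming(560), 2), "deg C")
```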

The text on John Cook's blog, either through sloppiness or deliberately, used a linear prescription for the warming as a function of the concentration instead of the more realistic, IPCC-like quasi-logarithmic function. This mistake has of course enhanced the warming predicted for higher concentrations (to the right of the data points), and their result was 2.38 °C, compatible with the IPCC interval. However, the actual result you get from this procedure is 1.66 °C, which is below the lower endpoint of the IPCC interval.

By their sloppiness and/or errors, they overestimated the correct result of their very method by 43 percent. See the first few comments for more remarks about this point.
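The 43 percent figure is simply the ratio of the two sensitivities minus one. Here is a trivial Python check using only the numbers stated above.

```python
linear_estimate = 2.38  # deg C per doubling, linear prescription (John Cook's blog)
log_estimate = 1.66     # deg C per doubling, quasi-logarithmic fit (this text)

overestimate = linear_estimate / log_estimate - 1
print(f"overestimate: {overestimate:.0%}")
```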

We may also calculate that

warming [389 ppm] = 0.76 °C

which is the warming we should have received so far according to the optimized logarithmic formula. It follows that 1.66 - 0.76 = 0.9 °C of warming would be left until we reach 560 ppm, which I expect to happen pretty close to the year 2100.

Instead of 0.9 °C, John Cook's blog produces the figure 0.0085 * 170 = 1.45 °C for this very question, which is an overestimate by a stunning 60 percent. We're not even arguing whether the methodology makes sense: if you just fix the functions by correcting a simple mistake they're demonstrably aware of (and make fun of), you will get a hugely different result than they have obtained.
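The remaining-warming arithmetic can be reproduced from the fitted function. The Python sketch below repeats the definitions so that it runs on its own. It computes the warming at 389 ppm, the amount left until 560 ppm, and the size of the 0.0085 * 170 overestimate.

```python
import math

def temp_prep(conc: float) -> float:
    return math.log(1 + 1.2 * conc + 0.005 * conc**2 + 0.0000014 * conc**3)

def temp(conc: float) -> float:
    return temp_prep(conc) - temp_prep(280)

def warming(conc: float) -> float:
    """Fitted warming in deg C (slope 1.40, all warming attributed to CO2)."""
    return 1.40 * temp(conc)

so_far = warming(389)                # warming "received" by 389 ppm
remaining = warming(560) - so_far    # left until the doubling
linear_remaining = 0.0085 * 170      # figure from John Cook's blog

print(round(so_far, 2), round(remaining, 2), round(linear_remaining, 3))
print(f"overestimate: {linear_remaining / remaining - 1:.0%}")
```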

Again, let me emphasize that these expected figures for the warming (I mean my results, the correct ones) are likely to be overestimates, because I/we have assumed that the whole observed warming could be represented as the effect of CO2 plus "noise". However, it's more likely that other climate drivers have contributed temperature changes of the same sign as the observed total ones, so the effect of CO2 was smaller, and it's only the effect of CO2 that may be extrapolated. For example, it's pretty clear that it's been warming since the little ice age. If 1/2 of the warming were caused by non-CO2 effects, all the predictions for the CO2-induced warming would have to be divided by two.
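Following the reasoning of the paragraph above, if only a share of the observed trend is due to CO2, the fitted coefficient 1.40 and every extrapolation built on it shrink by that share. The hypothetical helper `co2_only` below applies that rescaling to the figures derived so far. It is a sketch of the proportionality argument, not a separate attribution analysis.

```python
def co2_only(projection: float, co2_share: float) -> float:
    """Rescale a projection that attributed all observed warming to CO2.

    Assumes, as in the text, that the CO2-induced part is simply a fixed
    fraction `co2_share` of the fitted trend.
    """
    if not 0.0 <= co2_share <= 1.0:
        raise ValueError("co2_share must lie between 0 and 1")
    return projection * co2_share

# Figures derived above with 100% CO2 attribution, in deg C.
figures = {"per doubling": 1.66, "so far (389 ppm)": 0.76, "389 to 560 ppm": 0.9}
for label, value in figures.items():
    print(f"{label}: {value} -> {co2_only(value, 0.5):.2f} if half is non-CO2")
```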

Clearly, even if you attribute everything to CO2, a warming by 1 °C per century would still represent no threat in any sense. After all, we tried it in the 20th century, too.

Finally, let me mention that even if the CO2 emissions continued to increase exponentially for another century, in agreement with the formula at the very top of this blog entry, we would reach 805 ppm in the year 2100. The corresponding warming since the pre-industrial era would be

warming [805 ppm] = 2.62 °C

or 2.62-0.76 = 1.86 °C from 2010. That's hardly a reason for concern - and it is the very maximum number that you may conceivably get by such manipulations. It assumes that the consumption of fossil fuels will continue to grow exponentially for another century, and that all the observed "systematic" temperature change in the last 160 years may be attributed to CO2.
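The worst-case chain, from the exponential concentration Ansatz to the fitted warming, can be written out in a few lines of Python. The definitions are repeated so the sketch is self-contained. As in the text, 389 ppm is used as the present-day reference.

```python
import math

def conc(year: float) -> float:
    """Exponential CO2 Ansatz from the top of the text, in ppm."""
    return 280.0 + 22.3 * math.exp((year - 1920.0) / 57.0)

def temp_prep(c: float) -> float:
    return math.log(1 + 1.2 * c + 0.005 * c**2 + 0.0000014 * c**3)

def warming(c: float) -> float:
    """Fitted warming in deg C relative to 280 ppm (slope 1.40)."""
    return 1.40 * (temp_prep(c) - temp_prep(280))

c2100 = conc(2100)
w2100 = warming(c2100)
w_now = warming(389)  # present-day reference used in the text

print("CO2 in 2100:", round(c2100), "ppm")
print("warming since pre-industrial:", round(w2100, 2), "deg C")
print("warming from 2010 to 2100:", round(w2100 - w_now, 2), "deg C")
```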

Of course, you may invent huge conspiracy theories, e.g. that most of the warming so far was masked by some cooling source that will suddenly end, or something like that. Such claims are extremely unlikely, especially because we know that after the little ice age, it was getting warmer even without any CO2 growth. Of course, the nuttier your conspiracy theory, the further from the numbers above you may get.